Update (September 28, 2018): Reuters reports that the court has denied the government's request to force Facebook to assist with the wiretap.

Late last week, Reuters reported that Facebook is being asked to “break the encryption” in its Messenger application to assist the Justice Department in wiretapping a suspect's voice calls, and that Facebook is refusing to cooperate. The report alarmed us in light of the government’s ongoing calls for backdoors to encrypted communications, but on reflection we think it’s unlikely that Facebook is being ordered to break encryption in Messenger. The reality is probably more complicated.

The wiretap order and related court proceedings arise from an investigation of the MS-13 gang in Fresno, California, and are entirely under seal. So while we don’t know exactly what method for assisting with the wiretap the government is proposing Facebook use, if any, we can offer our informed speculation based on how Messenger works. This post explains our best guess(es) as to what’s going on, and why we don’t think this case should result in a landmark legal precedent on encryption.

We do fear that this is one of a series of moves by the government that would allow it to chip away at users’ security, done in a way such that the government can claim it isn’t “breaking” encryption. And while we suspect that most people don’t use Messenger for secure communications—we certainly don’t recommend it—we’re concerned that this move could be used as precedent to attack secure tools that people actually rely on.

The nitty gritty:

Messenger is Facebook’s flagship chat product, offering users the ability to exchange text messages and stickers, send files, and make voice and video calls. Unlike Signal and WhatsApp (also a Facebook product), however, Messenger is not marketed as a “secure” or encrypted means of communication. Messenger does have the option of enabling “secret” text conversations, which are end-to-end encrypted and make use of the Signal protocol (also used by WhatsApp).

However, end-to-end encryption is not an option for Messenger voice calls.

At issue here is a demand by the government that Facebook help it intercept Messenger voice calls. While Messenger’s protocol isn’t publicly documented, we believe that we have a basic understanding of how it works, and of how it differs from actual secure messaging platforms. But first, some necessary background on how Messenger handles non-voice communications.

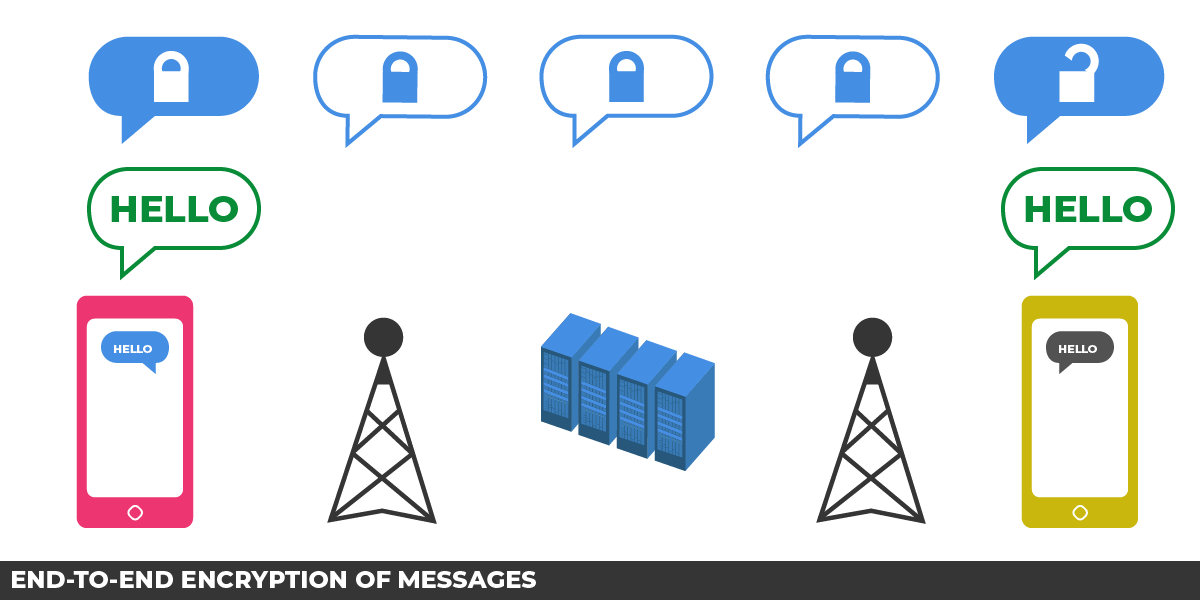

When someone uses Messenger to send a text chat to a friend, the user’s client (the app on their smartphone, for example) sends the message to Facebook’s servers, encrypted so that only Facebook can read it. Facebook then saves and logs the message, and forwards it on to the intended recipient, encrypted so that only the intended recipient can read it. Because Facebook sees every message before it’s delivered, when the government wants to listen in on those conversations the company can turn those chats over in real time (in response to a wiretap order) or turn over some amount of the user’s saved chat history (in response to a search warrant).
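To make the hop-by-hop flow concrete, here is a minimal Python sketch of a relay that decrypts each message, logs it, and re-encrypts it for the recipient. Everything in it is an assumption for illustration: `RelayServer`, `toy_xor`, and the per-client transport keys are hypothetical names, and the XOR "cipher" is a stand-in that is not secure. It is not a description of Facebook's actual implementation.

```python
import hashlib

def toy_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher (NOT secure): XOR data with a SHA-256-derived keystream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))

class RelayServer:
    """Hypothetical server that shares one transport key with each client."""

    def __init__(self):
        self.client_keys = {}
        self.log = []  # plaintext history the server could later hand over

    def register(self, user: str, key: bytes) -> None:
        self.client_keys[user] = key

    def relay(self, sender: str, recipient: str, ciphertext: bytes) -> bytes:
        # The server decrypts the sender's hop, so it sees the plaintext...
        plaintext = toy_xor(self.client_keys[sender], ciphertext)
        self.log.append((sender, recipient, plaintext))
        # ...and then re-encrypts it for the recipient's hop.
        return toy_xor(self.client_keys[recipient], plaintext)

server = RelayServer()
alice_key, bob_key = b"alice-transport-key", b"bob-transport-key"
server.register("alice", alice_key)
server.register("bob", bob_key)

to_server = toy_xor(alice_key, b"see you at noon")
to_bob = server.relay("alice", "bob", to_server)
received = toy_xor(bob_key, to_bob)
```

In this sketch the message is encrypted on both hops, yet `server.log` holds the plaintext, which is why a provider in this position can respond to a wiretap order or a search warrant without touching the encryption itself.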

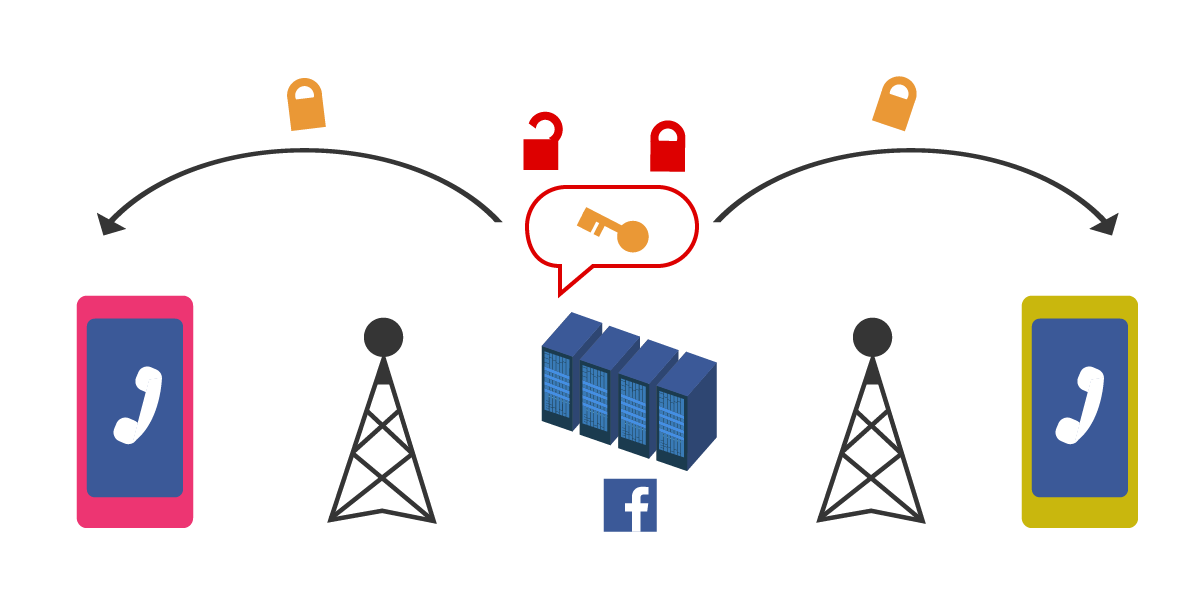

However, when someone uses Messenger to initiate a voice call, the process is different. Messenger uses a standard protocol called WebRTC for voice (and video) connections. WebRTC relies on Messenger to set up a connection between the two parties to the call that doesn’t go through Facebook’s servers. Rather, for reasons of cost, efficiency, and latency, and to ensure that the audio skips as little as possible, the data that makes up a Messenger voice call takes a shorter route between the two parties. That voice data is encrypted with something called the “session key” to ensure that a nosy network administrator sitting somewhere between the two parties to the call can’t listen in.

This two-step process is typical in Voice over IP (VoIP) calling applications: first the two parties each communicate with a central server which assists them in setting up a direct connection between them, and once that connection is established, the actual voice data (usually) takes the shortest route.

Step 1: A central server facilitates a key exchange between two devices. The server cannot read these keys.

Step 2: The session keys are then used for encrypting the call between the devices.
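One way a server can facilitate a key exchange without learning the key is a Diffie-Hellman style agreement, sketched below in Python. This is a toy under stated assumptions: the `Device` and `SignalingServer` classes are hypothetical, the prime is far too small for real use, and the exchange stands in for whatever handshake a given calling app actually performs. It is not WebRTC's real handshake.

```python
import hashlib
import secrets

# Toy parameters (assumption): 2**127 - 1 is prime but much too small for real use.
P = 2**127 - 1
G = 3

class Device:
    """Hypothetical call participant holding a private value it never sends."""

    def __init__(self):
        self._private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
        self.public = pow(G, self._private, P)        # safe to send via the server

    def session_key(self, peer_public: int) -> bytes:
        # Both sides compute the same shared secret from the other's public value.
        shared = pow(peer_public, self._private, P)
        return hashlib.sha256(shared.to_bytes(16, "big")).digest()

class SignalingServer:
    """Hypothetical central server: it relays public values and nothing else."""

    def __init__(self):
        self.seen = []

    def forward(self, public_value: int) -> int:
        self.seen.append(public_value)
        return public_value

server = SignalingServer()
caller, callee = Device(), Device()

# Step 1: each device receives the other's public value through the server.
caller_key = caller.session_key(server.forward(callee.public))
callee_key = callee.session_key(server.forward(caller.public))

# Step 2: both devices now hold the same session key for encrypting the call.
```

The server in this sketch sees only the two public values in `server.seen`. Deriving the session key also requires one of the private values, which never leave the devices.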

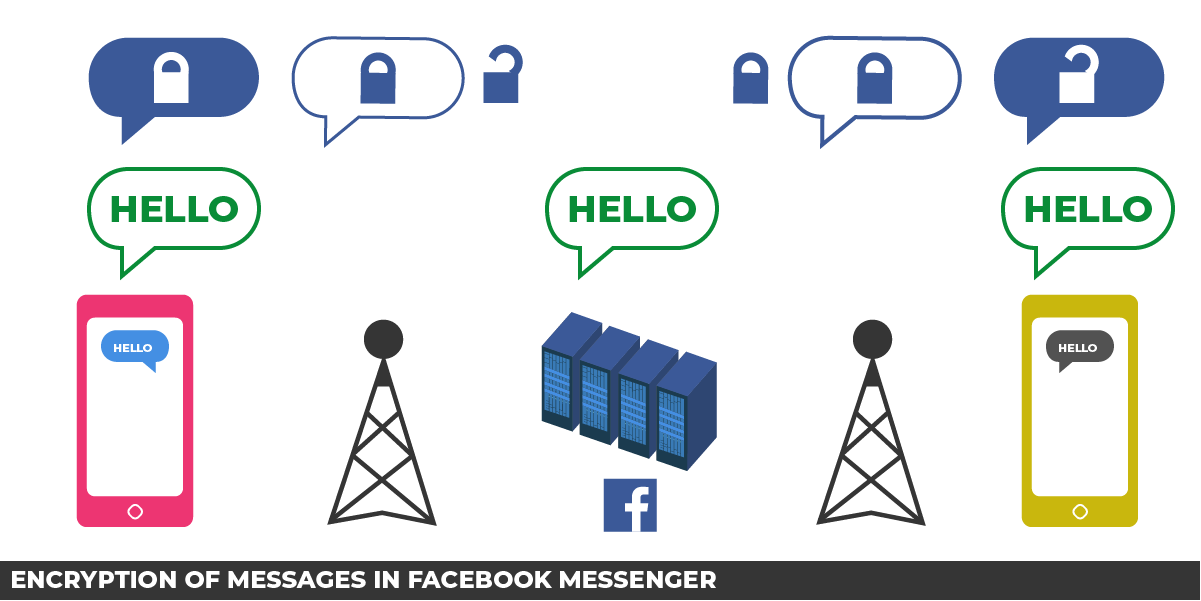

But in Messenger, some information related to the voice call does go through Facebook’s servers, especially when the call is first initiated. That data includes the session key that encrypts the voice data.

Step 1: The two devices communicate with a Facebook central server, sending their keys through the server.

Step 2: The two devices use the session keys to encrypt the call.

This differs in a major way from other secure messaging applications like Signal, WhatsApp, and iMessage. All of those apps use protocols that encrypt that initial session key—the key to the voice data—in a way that renders it unreadable by anyone other than the intended participants in the conversation.

So even though Facebook doesn’t actually have the encrypted voice data, if it did somehow have that data, we’re pretty sure that it would have the technical means to decrypt it. In other words, despite the fact that the voice data is encrypted all the way between the two callers, it’s not really what we refer to as “end-to-end encrypted” because someone other than the intended recipient of the call—in this case Facebook—could decrypt it with the session key.
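The difference can be shown with a short Python sketch in which the session key itself travels through the server in readable form. It reflects our understanding as described above, not confirmed details of Messenger: `KeyRelayServer`, `forward_key`, and `toy_xor` are hypothetical names, retaining the key is an assumed extra step, and the XOR "cipher" is a non-secure stand-in.

```python
import hashlib
import secrets

def toy_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher (NOT secure): XOR data with a SHA-256-derived keystream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))

class KeyRelayServer:
    """Hypothetical signaling server that forwards the session key as-is."""

    def __init__(self):
        self.retained_keys = []

    def forward_key(self, session_key: bytes) -> bytes:
        # Assumption: the server can read the key in transit, so it could keep a copy.
        self.retained_keys.append(session_key)
        return session_key

server = KeyRelayServer()

# Call setup: the caller picks a session key and sends it via the server.
session_key = secrets.token_bytes(32)
callee_key = server.forward_key(session_key)

# The voice data travels directly between the devices, not through the server.
voice_packet = toy_xor(session_key, b"hello, can you hear me?")
heard_by_callee = toy_xor(callee_key, voice_packet)

# Anyone holding the retained key and a captured packet can decrypt it too.
recovered_by_key_holder = toy_xor(server.retained_keys[0], voice_packet)
```

The server never handles `voice_packet` in this sketch, but because it saw the session key, the encryption between the two callers does not keep the call private from whoever holds that key.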

So what’s at stake in this case:

Assuming our technical understanding is roughly correct, Facebook can’t currently turn over unencrypted voice communications to the government without additional engineering effort. The question is what sort of engineering would be required, and what effect it would have on user security, both within Facebook and more generally. We’ve been able to identify at least four possible ways the government might ask Facebook to assist with its wiretap:

- Force Facebook to retain the session key to the suspect’s conversation and turn it over to the government. The government would then use that key to decrypt voice data separately captured by the suspect’s ISP (likely a mobile provider in this case).

- Force Facebook to construct a man-in-the-middle attack by directing the suspect’s phone to route Messenger voice data through Facebook’s servers, then capture and use the session key to decrypt the data.

- Force Facebook to push out a custom update to the suspect’s version of Messenger that would record conversations on the device and send them directly to the government.

- Demand that Facebook just figure out how to record the suspect’s conversations and turn them over—decrypted—to the government.

In broad strokes, these scenarios look similar to the showdown between Apple and the FBI in the San Bernardino case: the government compelling a tech company to alter its product to effectuate a search warrant (here a wiretap order). One obvious difference on the legal front is that the Apple case turned on the All Writs Act, whereas here the government is almost certainly relying on the technical assistance provision of the Wiretap Act, 18 U.S.C. § 2518(4). As we saw in the Apple case, the All Writs Act is a general-purpose gap-filling statute that allows the government to get orders necessary to further existing court orders, including search warrants. The Wiretap Act’s technical assistance provision is narrower and more specific, requiring communication service providers to furnish “technical assistance necessary to accomplish the interception unobtrusively and with a minimum of interference with the services.”

What are the limits of this duty to provide necessary technical assistance, and would it extend to the four possible demands we listed above? While we’re not aware of a judicial decision that’s directly on point, the Ninth Circuit Court of Appeals wrote in a well-known case interpreting this “minimum of interference” language that private companies' obligations to assist the government have “not extended to circumstances in which there is a complete disruption of a service they offer to a customer as part of their business.” And, invoking case law on the All Writs Act, the court held that an “intercept order may not impose an undue burden on a company enlisted to aid the government.”

The government could of course be expected to argue that the options above are not unreasonably burdensome and that Messenger service would not be significantly disrupted. These arguments might have some force if Facebook’s participation is limited to preserving the session key for the suspect’s conversations. After all, this information already likely passes through Facebook’s servers in a way that Facebook could choose to capture it. One unknown is to what extent Facebook sees its role in facilitating Messenger calls as ensuring the security of the calls. If, as in the Apple case, Facebook tried to make it difficult to bypass security features in the system, cooperation would potentially be quite disruptive. But the government might say that in this context Facebook is much like a webmail provider such as Gmail that uses TLS to encrypt mail between the user and Google. Google has the keys to decrypt this data, so it can comply with a wiretap. Facebook’s role isn’t exactly the same, but it certainly can obtain the session keys.

In the scenario where Facebook is being asked to push a custom update, the company might raise more forceful arguments like those made by security experts in the Apple case about the risks of undermining public trust in automatic security updates. Computer security is hard, and using a trusted channel to turn a suspect’s phone into a surveillance device could have disastrous consequences. And if the government is simply telling Facebook to “figure it out” (option 4), Facebook might have reason to question the necessity of its assistance as well as its feasibility, since the government would not have demonstrated why other techniques would be unsuccessful in carrying out the surveillance.

All of this points to a strong need for the public to know more about what’s going on in the Fresno federal court. The Reuters article indicates that Facebook is opposing the order in some respect, and we at EFF would love the opportunity to weigh in as amicus, as we did in San Bernardino. We hope the company will do its utmost to get the court to unseal at least the legal arguments in the case. It should also ask the court to allow amicus participation on any issues involving novel or significant interpretations of the Wiretap Act or other technical assistance law.

Most important, we cannot allow the government to weaponize any ruling in this case in its larger push to undermine strong encryption and digital security. The government’s narrative has long been that there is a “middle ground,” and that companies should engage in “responsible encryption.” It loves to point to services that use TLS as examples of encrypted data that can yield to lawful court orders for plaintext. Similarly, in the San Bernardino case, the FBI did not technically ask Apple to “break the encryption” in iOS, but instead to reengineer other security features that protected that encryption. These are dangerous requests that still put users at risk, even though they don’t involve tampering with the math supporting strong encryption.

We will follow this case closely as it develops, and we’ll push back on all efforts to undermine user security.