Mark Zuckerberg’s op-ed in the Wall Street Journal today (paywalled, but summarized here) relies on all-too-familiar refrains to explain the dubious principles and so-called “facts” behind Facebook’s business model. It’s the same old song we’ve heard before. And, as usual, it wildly misses users’ actual privacy concerns and preferences.

"Users Prefer Relevant Ads"

He starts with one of his greatest hits: “People consistently tell us that if they’re going to see ads, they want them to be relevant.” This perpetuates the ad industry’s favorite false dichotomy: either consumers can have “relevant” ads—targeted using huge collections of sensitive behavioral data—or they can be bombarded by spam for knock-off Viagra and weight-loss supplements. The truth is that ads can be made “relevant” and profitable based on the context in which they’re shown, like putting ads for outdoor gear in a nature magazine. To receive relevant ads, you do not need to submit to data brokers harvesting the entire history of everything you’ve done on and off the web and using it to build a sophisticated dossier about who you are.

Zuckerberg soothingly reassures users that “You can find out why you’re seeing an ad and change your preferences to get ads you’re interested in. And you can use our transparency tools to see every different ad an advertiser is showing to anyone else.” But a recent Pew survey on how users understand Facebook’s data collection and advertising practices, and our own efforts to disentangle Facebook’s ad preferences, tell a far different story.

Pew found that 74% of U.S. adult Facebook users didn’t even know that Facebook maintained information on their advertising interests and preferences in the first place. When Pew directed users to the ad preferences page where some of this information resides, 88% found there that Facebook had generated inferences about them, including household income level and political and ethnic “affinities.” Over a quarter of respondents said the categories “do not very or at all accurately represent them.”

It gets worse. Even when the advertising preferences Facebook had assigned to them were relevant to their real interests, users were not comfortable with the company compiling that information. As Pew reports, “about half of users (51%) say they are not very or not at all comfortable with Facebook creating this list about their interests and traits.”

So we’d like to know: on what basis does Zuckerberg claim that users, who Pew has demonstrated are overwhelmingly unaware of and uncomfortable with the data collection and targeting that power Facebook’s business model, are clamoring for the kinds of “relevant” ads Facebook is providing?

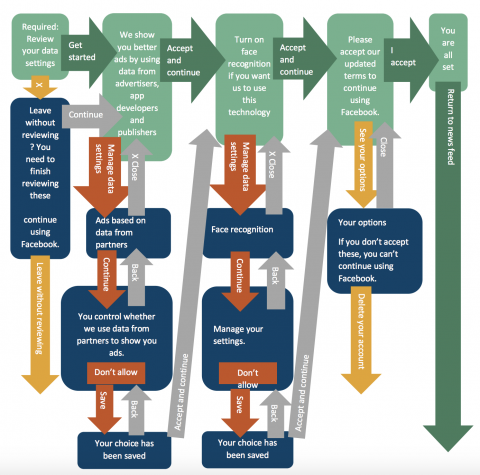

Source: https://fil.forbrukerradet.no/wp-content/uploads/2018/06/2018-06-27-deceived-by-design-final.pdf

His op-ed goes on to claim that “when we asked people for permission to use this information to improve their ads as part of our compliance with the European Union’s General Data Protection Regulation, the vast majority agreed because they prefer more relevant ads.” If Zuckerberg is referring here to the consent requests that users were prompted to click through last spring (documented in detail in this report and pictured above), then this statement is a stretch at best. Those requests were part of a convoluted process engineered to maximize the ways a user could say “yes.” In particular, it took one tap of a button to “opt in” to Facebook’s terms, but three levels of dialog to decline.

"We Don’t Sell Your Data*"

Next, Zuckerberg deploys Facebook’s favorite PR red herring: he says that Facebook does not sell your data. It may be the case that Facebook does not transfer user data to third parties in exchange for money. But there are many other ways to invade users’ privacy. For example, the company indisputably does sell access to users’ personal information in the form of targeted advertising spots. No matter how Zuckerberg slices it, Facebook’s business model revolves around monetizing your data.

Transparency is a necessary, but not sufficient, principle for Facebook to rely on here. Just knowing how you’re being tracked doesn’t make it less invasive. And any transparency efforts have to confront the fact that roughly half of Americans simply don’t trust social media companies like Facebook to protect their data in the first place.

Saying One Thing and Lobbying Another

Zuckerberg ends his op-ed with a call for government regulation codifying the principles of “transparency, choice, and control.” But in reality, Facebook is tirelessly fighting against laws that might do just that. It is actively battling in court to undermine Illinois’ Biometric Information Privacy Act. And the Internet Association, of which Facebook is a member, has asked California legislators to weaken the California Consumer Privacy Act and is pushing for a national law only if it “preempts” and rolls back those vital state protections.

Nearly all of Zuckerberg’s claims will be familiar to anyone who’s followed Facebook’s recent privacy issues. But Facebook users are ready for something new: policies that promote real privacy and user choice, and not just the tired excuses and non-sequiturs that Zuckerberg published today.