When Google announced its new Allo messaging app, we were initially pleased to see the company responding to long-standing consumer demand for user-friendly, secure messaging. Unfortunately, it now seems that Google's response may cause more harm than good. While Allo does expose more users to end-to-end encrypted messaging, this potential benefit is outweighed by the cost of Allo's mixed signals about what secure messaging is and how it works. This has significance for secure messaging app developers and users beyond Google or Allo: if we want to protect all users, we must make encryption our automatic, straightforward, easy-to-use status quo.

The new messaging app from Google offers two modes: a default mode, and an end-to-end encrypted “incognito” mode. The default mode features two new enhancements: Google Assistant, an AI virtual assistant that responds to queries and searches (like “What restaurants are nearby?”), and Smart Reply, which analyzes how a user texts and generates likely responses to the messages they receive. The machine learning that drives these features resides on Google’s servers and needs access to chat content to “learn” over time and personalize services. So, while this less secure mode is encrypted in transit, it is not encrypted end-to-end, giving Google access to the content of messages as they pass unencrypted through Google servers.

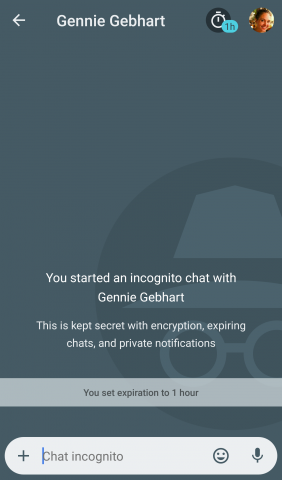

Allo’s separate “incognito” mode provides end-to-end encryption and uses a darker background to distinguish it from the default mode. Messages sent in this mode are not readable on Google’s servers, and can be set to auto-delete from your phone after a certain period of time. The Assistant and Smart Reply features, which depend on Google having access to message content, don’t work in “incognito” mode.

This setup may be convenient for some users, but is ultimately dangerous for all users. Here's why.

What does “incognito” really mean?

The term “incognito” is somewhat misleading. Some users may already be familiar with it from the Google Chrome browser’s incognito mode. But “incognito” in Chrome and “incognito” in Allo refer to two dramatically different security situations.

In Chrome, incognito mode means your browser does not keep a local record of your activity (page history, cookies, search history, and so forth). It does not, however, change how your traffic is encrypted on the network, which means that your internet service provider can still determine the websites you visit.

In Allo, activity in incognito mode is end-to-end encrypted; no one can read your messages except for you and the recipient. Incognito conversations in Allo, however, are stored on your device for a certain period of time after you send them, unlike browsing history in Chrome’s incognito mode.

Google's decision to use the same label for these two very different sets of security guarantees is likely to cause users to misunderstand and underestimate Allo’s end-to-end encryption—or, even worse, overestimate Chrome’s incognito browsing mode and expose themselves to more risk than the name “incognito” leads them to expect.

Not a fool-proof system

Mixing end-to-end encryption with less secure messaging options within the same app makes dangerous mistakes possible. Although Allo differentiates between the two modes with differently colored backgrounds, the ability to maintain both default and “incognito” conversations with the same contact makes it easy to accidentally tap the wrong thread. History suggests that combining communication modes like this will lead to users inadvertently sending sensitive messages without end-to-end encryption. A messaging app that claims to be secure needs to take design steps to make this kind of mistake next to impossible; Allo makes it too easy.

Teaching the wrong lessons about encryption

Offering end-to-end encrypted messaging as a convenient, every-once-in-a-while alternative to the “default” of less secure messaging teaches users dangerous lessons about what encryption is and what it is for. Allo encourages users to encrypt when they want to send something “private” or “secret,” which we fear users will interpret as sensitive, shady, or embarrassing. And if end-to-end encryption is a feature that you only use when you want to hide or protect something, then the simple act of using it functions as a red flag: “Look here! Valuable, sensitive information worth hiding over here!”

This is important on both the individual level and the group level. For an individual, encrypting everything is among the best ways to protect your communications. If you only encrypt your texts when you are sending credit card information or scandalous sexts, then a hacker or spy knows exactly where to start digging for valuable or compromising data. Similarly, if you encrypt only when organizing a street protest or coordinating among activists, the fact that you’ve suddenly turned on encryption is potentially useful for a state adversary or anyone with passive intercept capability. If you encrypt all of your texts, however, then it is much harder for an eavesdropper to distinguish idle chit-chat from important conversations.

Encrypting all of your communications also protects others. Encryption is vital for targeted or marginalized user groups, including journalists, activists, and survivors of domestic abuse. If they are the only people who encrypt, then their communications stick out like sore end-to-end encrypted thumbs. But if everyone encrypts as their automatic default, potential surveillance targets are harder to spot.

This mass encryption protects the people who need it most. Even if you believe you have nothing to hide, encrypting your communications helps shield those who do. But by training users to use encryption as an occasional measure to protect especially sensitive communications rather than as a daily default, Allo may help users who do not need encryption at the risk of harming those who do.

More effective alternatives

Instead of mixing end-to-end encryption and less secure options in the same app, Google could offer two stand-alone apps: a less secure one offering machine learning services, and a secure one offering reliable, easy-to-use end-to-end encryption.

Alternatively, Google could offer options to make Allo itself a secure-by-default app. A prominent setting to automatically end-to-end encrypt and auto-delete all conversations at all times, for example, would give users the choice to make the app fool-proof.

This kind of security should not have to rely on user initiative, however. A more responsible messaging app would make security and privacy—not machine learning and AI—the default. We hope Google decides to accept that responsibility.