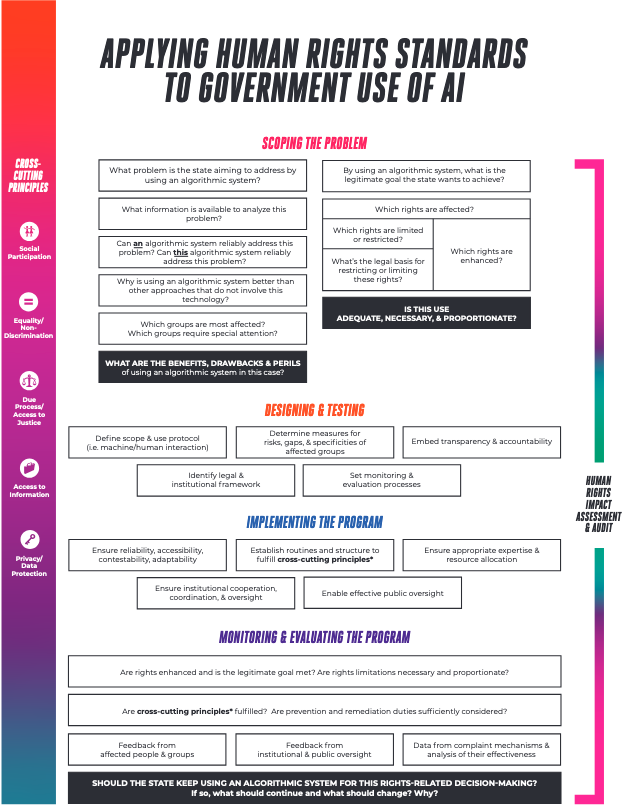

Essential Baseline

The essential baseline of any adoption of AI/ADM systems by state institutions for rights-based determinations is the States’ obligation to respect human rights and fundamental freedoms. This obligation entails the duties to prevent, investigate, punish, and remedy human rights violations. The Inter-American standards and unfolding implications show a set of cross-cutting rights that apply in this context and must be considered in their interdependence, meaning that ensuring one is closely related and dependent on fulfilling the other.

One major consequence highlighted throughout the paper’s implications is that States must have the proper processes and apparatus in place to comply with such rights, including to prevent violations or provide effective remedy and reparation in case they regrettably occur. The commitments States have undertaken before the Inter-American System bind all state institutions and those acting on their behalf. Legal frameworks must adjust to such commitments and any legislation failing to abide by conventional norms demands domestic court review to establish adequate interpretation or the need for review (see more about “conventionality control” in Section 1.2).

Basic Tenets

The principles that the State is the guarantor of rights and responsible for their promotion and protection and that people and social groups are holders of rights with the capacity and right to call for these rights and participate1 are the basic tenets of any legitimate use of AI/ADM systems for state bodies' decisions affecting the recognition, enjoyment, and exercise of human rights.

This means that State action must have the promotion and protection of human rights as its compass, its underlying general goal. As such, States’ commitments before international human rights law must guide the way States organize their structure and conduct their activities. In addition, the fact that people and social groups are rights holders implies that their relationship with state institutions entails guarantees and safeguards that public bodies and officials must meet when conducting public services, social assistance, administrative or judicial adjudication, among other functions. This includes people’s power to challenge decisions that deny or arbitrarily limit their rights. That is, as rights holders, people have the capacity to demand their rights before state institutions and to participate in public decision-making. This participation is not only desirable but also an enforceable right and an obligation of the State.2 The coordination of both principles also stresses that States are responsible and accountable for their decisions affecting human rights, regardless of whether or not they have integrated an AI/ADM tool into such decision-making procedures.

The implications of Inter-American Human Rights standards developed throughout this report reflect this essential baseline and these basic tenets, deepening what they entail in terms of processes, structures, and safeguards. These are the foundation upon which we unfold a human-rights based operational framework, with recommendations to underpin legitimate use of AI/ADM systems by state institutions in the context of rights-based determinations.

Moreover, the implications we outline in each chapter of this report must drive the application of the operational framework as specific guidance for when related rights are or may be affected.

Crucial Aspects of Transparency in Government Use of AI

A Human-Rights Based Approach to Trade Secrecy and Intellectual Property

A vital feature of the operational framework developed in Section 5.4 is a strict and consistent human rights-based approach to any limitations on public scrutiny of AI/ADM systems’ design and functioning. The implications in the previous chapters, especially in Section 4.2, address many of them, such as national and public security. In this section, we briefly discuss business justifications around the protection of trade secrets.

AI/ADM businesses may claim trade secret rights in software algorithms and source code, and argue that independent audits and public scrutiny of their systems will violate those rights. A trade secret is economically valuable information that a business makes reasonable efforts to keep confidential.3 In some jurisdictions, trade secrets are considered to be a form of intellectual property (IP). Unlike IP such as patents, however, trade secrets by design are not publicly registered or disclosed.

Trade secret protections can help preserve fair competition by deterring industrial or commercial espionage and breach of confidence. Yet, those protections should align with international human rights law. Similarly, although whether trade secrets are considered a form of property can be controversial and varies by jurisdiction, protecting them and creators’ related rights under this framing must be consistent with human rights.

The Inter-American Court has specifically addressed the right to the use and enjoyment of one’s intellectual works.4 It derives from the right to property, enshrined in Article 21 of the American Convention,5 and the right to benefit from the protection of moral and material interests derived from any scientific, literary, or artistic production of which he or she is the author (Article 14(1)(c) of the Protocol of San Salvador and Article XIII of the American Declaration of the Rights and Duties of Man).6

According to the Court, the right to use and enjoy intellectual works involves a tangible dimension (the publication, exploitation, assignment, or transfer of the works) and an intangible dimension (the link between the creator and their works).7 The former concerns the author’s material interests while the latter refers to their moral interests, as protected by Art. 14(1)(c) of the Protocol of San Salvador and Art. XIII of the American Declaration.

Given that Art. 14(1)(c) of the Protocol of San Salvador practically replicates Article 15(1)(c) of the International Covenant on Economic, Social and Cultural Rights (ICESCR),8 we can look to the UN Committee on Economic, Social and Cultural Rights for insight.

The UN Committee has underlined that intellectual property rights seek to encourage the active contribution of creators to the arts and sciences and to the progress of society as a whole.9 Parsing the elements of Art. 15(1)(c), the UN Committee considers that only the “author,” understood as the human creator (“whether man or woman, individual or group of individuals”) of scientific, literary, or artistic productions, can be the beneficiary of this provision. The Committee points out that although legal entities, such as corporations, may hold intellectual property rights under existing international treaty protection regimes, their entitlements are not protected at the level of human rights.10 The Inter-American Court has also referred to authors of intellectual works as natural persons.11 This is in line with Art. 1 (2) of the American Convention, which establishes that “for the purposes of this Convention, ‘person’ means every human being.”

The protection of the author’s moral and material interests must also be balanced against other human rights. The UN Committee has stressed that

“In striking this balance, the private interests of authors should not be unduly favoured and the public interest in enjoying broad access to their productions should be given due consideration. States parties should therefore ensure that their legal or other regimes for the protection of the moral and material interests resulting from one’s scientific, literary or artistic productions constitute no impediment to their ability to comply with their core obligations in relation to the rights to food, health and education, as well as to take part in cultural life and to enjoy the benefits of scientific progress and its applications, or any other right enshrined in the Covenant. Ultimately, intellectual property is a social product and has a social function.”12

In the context of government use of AI/ADM systems for rights-affecting determinations, States’ core obligations concern specific impacted rights and the cross-cutting principles, as discussed in Section 5.4. Among other duties, States must prevent discrimination and guarantee due process. Accordingly, while the authors of software algorithms deserve adequate remuneration, their material interests may not block people’s ability to understand a decision affecting their rights, nor obstruct state institutions’ and society’s capacity to assess the reliability and efficacy of algorithmic systems (see Section 5.4).

Further, the UN Committee on Economic, Social and Cultural Rights has emphasized that Art. 15 (1) does not rest on a rigid distinction between the scientist and the general population.13 Rather, the UN Committee has affirmed that the right of everyone to participate in cultural life includes the right of every person to take part in scientific progress and in decisions concerning its direction.14 Similarly, enabling individuals and society to enjoy the benefits of scientific progress involves fostering broader critical scientific thinking and the dissemination of scientific knowledge.15 According to the UN Committee, “States parties should not only refrain from preventing citizen participation in scientific activities, but should actively facilitate it.”16 States should do so “particularly through a vigorous and informed democratic debate on the production and use of scientific knowledge.”17

Given the ongoing controversies related to the development and use of AI systems, that informed debate is particularly urgent. Accordingly, intellectual property rights should not hamper States’ and society’s ability to build on the best available scientific evidence to develop policies and support state decision-making on whether, and how, to adopt a certain technological solution.

These bases underpin the operational framework we detail in Section 5.4. This framework unfolds from a human rights-based approach to trade secrets and intellectual property, which ultimately articulates why authors’ and corporate interests cannot override the full set of human rights impacted by government use of AI/ADM systems—and how knowing, assessing, and understanding such systems are essential for ensuring these rights and fulfilling States’ core obligations towards them.18

Transparency: Access to Information, Interpretability, and Explainability

Step one of putting transparency commitments into practice is to inform affected individuals that decisions concerning them involve algorithmic systems. It also includes proactively disclosing which state policies or initiatives rely on AI/ADM systems for rights-affecting activities and determinations. As such, States should thoroughly disclose all AI/ADM systems in use (including by delegated third parties). Although still widely neglected, we see increasing efforts to meet this first basic step from either governments or researchers with varying degrees of detail.19

Regarding active transparency, States should as a basic first step disclose, in a systematic and user-friendly way, which AI/ADM systems are used and for which purposes. (Section 4.2)

State institutions’ proactive disclosure should also include related important information about the system, personal data processing involved, and underpinning legal framework, documentation and budget, as well as people’s rights and the means to exercise them (especially due process and data privacy related rights).

Those disclosures should also include the related legal framework, the categories of data involved, which institutions are in charge, which are the system’s developers and/or vendors, the public budget involved, reasons and documentation underpinning the adoption of the system, and all impact assessments carried out. Further, they should include performance metrics, information on the decision-making flow including human and AI agents, the rights of people affected, and the means available for review and redress. (Section 4.2; see also Section 4.5 for data processing related information)20

For example, States can organize most of this information in a public register, breaking it down by type of technology, name of the provider, state institution in charge, and related program or policy, among others. Moreover, streamlined access to documentation that justifies the adoption of the system is a vital element to underscore. Related contracts (including, but not limited to, purchase agreements with developers/vendors), procurement procedures, meeting minutes, and administrative procedures/files relating to the institution’s decision-making process as to whether or not to deploy the system are all connected to public spending and must be released by default. Any limitations must be strict in scope and pass a stringent test, as detailed in Section 4.2.
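As a rough illustration of how such a register could be structured, the sketch below models one entry and two simple operations on it: breaking the register down by an attribute and listing disclosures that are still missing. The class name, field names, and sample entries are hypothetical and chosen only to mirror the items listed above; they do not describe any existing register.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RegisterEntry:
    """One AI/ADM system in a hypothetical public register."""
    system_name: str
    technology_type: str
    provider: str
    institution_in_charge: str
    program_or_policy: str
    purpose: str
    legal_framework: List[str] = field(default_factory=list)
    data_categories: List[str] = field(default_factory=list)
    public_budget: str = ""
    impact_assessments: List[str] = field(default_factory=list)
    review_and_redress: str = ""


def group_by(entries: List[RegisterEntry], attribute: str) -> Dict[str, List[str]]:
    """Break the register down by one attribute, e.g. 'provider'."""
    groups = defaultdict(list)
    for entry in entries:
        groups[getattr(entry, attribute)].append(entry.system_name)
    return dict(groups)


def missing_disclosures(entry: RegisterEntry) -> List[str]:
    """Name the disclosure fields that are still empty for this entry."""
    to_check = ["legal_framework", "data_categories", "public_budget",
                "impact_assessments", "review_and_redress"]
    return [name for name in to_check if not getattr(entry, name)]


# Entirely made-up sample entries, for illustration only.
register = [
    RegisterEntry(
        system_name="Benefit eligibility scorer",
        technology_type="risk scoring",
        provider="Vendor A",
        institution_in_charge="Social assistance agency",
        program_or_policy="Cash transfer program",
        purpose="Prioritize applications for human review",
        legal_framework=["(placeholder) enabling statute"],
        data_categories=["income", "household composition"],
    ),
    RegisterEntry(
        system_name="Citizen service chatbot",
        technology_type="chatbot",
        provider="Vendor B",
        institution_in_charge="Civil registry",
        program_or_policy="Online services",
        purpose="Answer questions about procedures",
    ),
    RegisterEntry(
        system_name="Document classifier",
        technology_type="document classification",
        provider="Vendor A",
        institution_in_charge="Civil registry",
        program_or_policy="Online services",
        purpose="Route incoming documents",
    ),
]

print(group_by(register, "provider"))
print(missing_disclosures(register[0]))
```

A check like `missing_disclosures` only shows which fields are empty; whether the disclosed information is complete and accurate still has to be verified by people.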

Such information is crucial to prevent and tackle conflicts of interest and acts of corruption. State institutions should equally disclose related legal documents (e.g., data protection policies) and their protocols concerning the use of the system (see Section 5.4, “Design”). Still regarding States’ decision-making process as to whether (and, if so, how) to adopt AI/ADM tools for rights-based decisions, Section 5.4 emphasizes it should entail a complex, participatory, and documented process best articulated as a Human Rights Impact Assessment.

Furthermore, there is a set of complementary information that state institutions should have access to and make publicly available, ideally proactively or through information requests. They include details on the model’s training and testing datasets, the datasets the institution uses or will use to implement and validate the system, and further details on its performance and accuracy.21

These all relate to the transparency of algorithmic models, which involves the documentation of the AI model processing chain. It includes the technical principles of the model, the description of the data used for its conception, and other elements that are relevant for providing a good understanding of the model, thereby relating to interpretability and explainability goals.22

Decisions based on AI/ADM systems must have a clear, reasoned, and coherent justification. This means that systems employed for rights-based determinations must meet interpretability and explainability goals. (Section 4.4)

We can distinguish three levels of transparency of AI systems:23

1) Implementation, which refers to knowing the way the model acts on input data to output a prediction, including the technical architecture of the model – this is the standard level of transparency of most open source models.

2) Specifications, which concerns all information leading to the resulting implementation, including details on the specifications of the model (e.g., task, objectives, context), the training dataset, training procedure, model’s performance, and other elements that allow the implementation process to be reproduced – research papers often meet this level of transparency.

3) Interpretability/Explainability, which relates to enabling human understanding of underlying mechanisms of the model (e.g., the reason or logic behind an output) and the ability to demonstrate that the algorithm follows the specifications and aligns with human values.

Although the interpretability/explainability level is generally harder to achieve, especially depending on the complexity of the model, transparency at the implementation and specification levels is a matter of getting access to the respective information or having it publicly available. Although States should prioritize open source systems, a great deal of crucial information is already available if consistent documentation of the model’s design and implementation is released to the public.24 Proper documentation of the system’s specifications is also vital to allow a broader understanding of the human choices and decisions shaping the model’s operation,25 which contributes to our ability to interpret and explain the system’s decisions and predictions.

In meeting interpretability and explainability goals, we should be able to address two general categories of questions: “What are the system’s rules?” and “Why are these the rules?”26 or, in other words, “How does the system behave?” and “What justifies that the system behaves in this way?”

This combination of questions articulates both concerns related to understanding the system’s outputs and ascertaining whether its model is well justified.27 This means enabling “process-based explanations” to demonstrate that the system followed good governance processes and best practices throughout its design and use, as well as providing “outcome-based explanations.”28 The latter involves explaining the reasoning behind a specific algorithmic decision in easily understandable language according to the targeted audience. It also includes providing details about the human involvement in the decision-making process.

For achieving such goals, States adopting AI/ADM systems for rights-affecting determinations should:

- Prioritize the development, acquisition, and deployment of open source AI/ADM systems. In any case, proper assessment of the system before and during its implementation includes having access to and analyzing its source code (see Section 5.4);

- Make the system’s documentation of specifications publicly available, establishing this as a prerequisite for developers and vendors to contract with state institutions;

- Adopt only models that are interpretable29 or that follow an explainability-by-design approach.30

Operational Framework for Applying Inter-American Human Rights Standards

Source: Elaborated by the authors building on the “Diagram of analysis of public policy on the basis of the contribution of the IAHRS” at IACHR, Public policy with a human rights approach, September 15, 2018, p. 50.

Scoping the Problem

Before developing or implementing an AI/ADM system to support state action and decision-making affecting the recognition and exercise of human rights, state institutions must commit to undertaking a decision-making process as to whether or not to adopt the system that begins with the question: What is the problem/issue we aim to address by using an AI/automated system as part of rights-related decision-making? This question leads to another one, which is: What do we know about this problem?

To answer this question, it is crucial to examine what sources of information are available for conducting such analysis. This examination is two-fold: first, whether there is enough quantitative and qualitative information to carry out a situational assessment of the issue to tackle, its overall context, affected groups, and possible implications of proposed solutions; second, whether there is enough data available related to the problem to properly feed an AI/ADM system and lead to an informed and accurate outcome.31 Properly assessing the latter requires a broader understanding of the context, which connects to the first component of this analysis, so as to identify possible gaps and biases in the available data.

If the answer to this two-fold examination is negative, then the state institution lacks the appropriate conditions to reliably adopt an AI/ADM system in this context, and other approaches or previous steps must be considered instead. If the answer is affirmative, there is still a relevant set of elements to look at for establishing whether an AI/ADM system can reliably address the issue or problem, and more specifically, whether a certain technology or system already envisioned by the state institution can reliably do so.

The decision-making process described here embeds steps of an assessment that state institutions must genuinely conduct with proper documentation, transparency and social participation. It is crucial that they do so in collaboration with expert organizations, from academia and civil society, meaningfully engaging with affected groups and communities, and involving all public bodies related to the issue/problem to be addressed. This participative and coordinated analysis should take the form of a Human Rights Impact Assessment (HRIA) so that state institutions have the proper framework to identify rights enhanced and limited, as well as to establish the most appropriate approach regarding their interference with human rights.

In view of Inter-American democratic and participatory standards, this analysis cannot be a mere formality and box-checking exercise, nor a process that is confined to state offices and officials, or an analysis and social participation that are shaped so as to justify a decision already taken. By the same token, putting in place a participatory process, although essential, is not an end in itself. It is the vector for a substantive human rights analysis on whether and how the AI/ADM-based policy could proceed. This means that this process must be assessed against human rights law and standards, both procedurally and substantively, and can be contested on those grounds.

The steps and questions we describe here are not meant to exhaust the framing of this assessment, but they are all important elements that such participative analysis should include.

The implications in Chapter 2 highlight that while States’ use of AI/ADM systems can have the potential to promote conventional rights, their adoption in the context of rights-based determinations intrinsically entails at least a potential restriction or limitation to rights and freedoms, such as privacy, informational self-determination, and non-discrimination, among others.

As such, the application of the three-part test should inform how institutions conduct this assessment. In this sense:

Legality principle: the assessment must carefully identify which rights are restricted or limited within such AI/ADM-supported policy or decision-making procedure. Such analysis must consider the people, groups, and communities affected, dwelling on which of them require special attention. Any State action entailing the restriction or limitation of conventional rights must be provided for by law in accordance with Inter-American standards (see Sections 2.1, 2.2, and Article 30 of the American Convention).

Legitimate goal: the State must be clear about the legitimate goal it aims to achieve by employing an algorithmic system as part of its rights-related decision-making. That is, the issue or problem the State seeks to address must translate into a legitimate aim that is necessary in a democratic society, according to conventional terms (see Section 2.3). From the perspective of policymaking with a human rights approach,32 it is also relevant that the State clarify how the legitimate goal consists of or relates to enhancing the protection and/or promotion of human rights.

Adequate, Necessary, and Proportionate:

Adequate: the State must have sufficient elements demonstrating that the integration of an AI/ADM system into the decision-making process is an adequate means for achieving the legitimate goal pursued. This includes showing it is conducive and can be effective in attaining the legitimate goal.

Necessary and proportionate: having fulfilled the previous steps, the State use of an AI/ADM system to support decision-making affecting rights must be necessary to achieve the legitimate aim. Being “necessary” means that this measure is not only conducive to the legitimate goal, but also is the one least harmful to human rights. If that is the case, the analysis must continue by examining whether the measure is proportionate. This involves weighing the legitimate goal pursued and the rights it seeks to enhance vis-à-vis the rights restricted or limited, so that the system and the way it is integrated into the decision process are proportionately calibrated. This means fine-tuning to properly strengthen the rights enhanced while interfering to the least extent possible with the limited rights. The implications elaborated on throughout this report work as a guide for this analysis and tuning. This process includes defining adequate metrics, thresholds, safeguards, and mitigation measures, with careful consideration about groups that require special attention, in particular groups that have been historically discriminated against. If there is no such proportional balance, the State cannot legitimately proceed with the adoption of the AI/ADM system.

This is a general framework considering the three-part test. Provided that the steps regarding legality and legitimate aim are properly fulfilled, the conclusion of such analysis demands further consideration. There are other important elements that we must incorporate into the analysis, which relate to answering whether the adoption of an AI/ADM system can reliably address the problem or issue. Through this combined analysis, States and society will have a roadmap to ultimately establish whether such use is adequate, necessary, and proportionate, and thereby constitutes a legitimate application of AI/ADM systems by state institutions in the context of rights-based determinations.
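The sequential character of this test can be sketched as an ordered checklist in which each step is examined only after the previous ones are satisfied. The sketch below is a minimal illustration, assuming a hypothetical `Finding` record and step names of our own choosing. It merely records and orders conclusions reached by people through the participatory assessment; it does not, and could not, automate the legal judgment itself.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Order in which the steps are examined (names are illustrative assumptions).
STEPS = ["legality", "legitimate_goal", "adequacy", "necessity", "proportionality"]


@dataclass
class Finding:
    """A documented human conclusion on one step of the test."""
    satisfied: bool
    justification: str  # pointer to the reasoning and supporting documents


def first_unmet_step(findings: Dict[str, Finding]) -> Optional[str]:
    """Return the first step, in order, that is undocumented or not satisfied.

    Returns None only when every step has a documented, positive finding.
    """
    for step in STEPS:
        finding = findings.get(step)
        if finding is None or not finding.satisfied:
            return step
    return None


# Made-up example: the assessment stalls at necessity.
example = {
    "legality": Finding(True, "restriction provided for by law"),
    "legitimate_goal": Finding(True, "goal documented in the assessment"),
    "adequacy": Finding(True, "evidence the system can attain the goal"),
    "necessity": Finding(False, "a less harmful alternative exists"),
}
print(first_unmet_step(example))
```

Treating a missing finding the same as a negative one reflects the point that each step must be affirmatively demonstrated and documented before the analysis moves on.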

The list of questions below articulates some of the important elements this assessment should include:33

Is an AI/ADM system fit for the intended purpose? The analysis must confront and analyze the possibility that using an AI/ADM system is not a suitable approach to address the problem. When the rights-related decision-making at issue must rely on human prudence, reasoning, or experience, and automated pattern recognition of available datasets does not have a useful role to play in informing human intervention, then an algorithmic-based system will not be fit for purpose. The same problem occurs when the intended goal is hard to translate into mathematical variables (e.g., societal happiness) and there are no adequate measurable proxies from the available data.34 Even if some proxies could possibly work, the mathematical translation of a complex social phenomenon and the use of related proxies may not be the most suitable approach to address the issue or problem. In any case, it is essential to prevent the “formalism trap,” meaning the “failure to account for the full meaning of social concepts such as fairness, which can be procedural, contextual, and contestable, and cannot be resolved through mathematical formalisms.”35 It is also essential to consider the current status of the technology and its limitations vis-à-vis the safeguards and rights that must apply considering the purpose for which the system would be implemented (e.g., requirement of strict due process guarantees in relation to non-explainable systems).

As such, this assessment must follow human rights standards and related State obligations under human rights law. It is crucial that States refrain from adopting these systems with the sole purpose of reducing costs, as if automated systems could replace human assessment in critical decision-making. It is also imperative that state institutions do not delegate to automated systems intricate policy decisions that demand human knowledge and consideration. As we pointed out, proper human oversight should always apply in the context of government rights-related decision-making and, in any case, the State remains responsible for the decisions and actions taken on its behalf.

Therefore, this question is the opportunity to genuinely assess whether and, if so, how an AI/ADM system effectively adds to human decision-making in addressing the issue or problem. Why is using an AI/ADM system better than other approaches that do not involve this technology?

For that, it is important to build upon the information previously gathered to understand how government institutions are doing in this matter and whether, and if so how, the implementation of algorithmic solutions is conducive to improving the situation and achieving the legitimate aim pursued. Clearly establishing what must be tackled and how success or failure is measured is key and must follow a human rights approach. Answering this question also includes verifying whether the envisioned technology or specific system has shown effective results in other implementations and the differences and similarities of these previous experiences with the specific social context in which the State intends to apply the AI/ADM system.

Is this technology or system reliable? Two main aspects indicate that an algorithmic system is not reliable: poor performance and vulnerabilities. Poor performance means that the model does not perform well in a given task, which can lead to inaccurate, discriminatory, or otherwise harmful outcomes. Vulnerabilities mean that the model performs well but has weaknesses that may lead to malfunctions in specific conditions, including security breaches. These malfunctions may derive from the regular execution of the software or be intentionally exploited or provoked by an adversary with malicious intentions.36 The question here is then: What assurances exist that the system performs well (including fairly) and has robust security?37

Developers and vendors of AI/ADM systems used by state institutions must provide sufficient guarantees that their systems perform well and have robust guardrails against vulnerabilities. These guarantees must include evidence that developers took the necessary steps to assess, prevent, and mitigate possible detrimental impacts to human rights, and that their systems meet proper standards of transparency, fairness, privacy, security, among other features. In turn, States must refrain from implementing AI/ADM technologies that do not provide these guarantees, which includes systems with a track record of human rights violations.

This first step is essential, but it is not enough. Systems previously subject to public scrutiny and independent auditing should have preference and may be required for consequential decisions. Independent and rigorous certification mechanisms could also play a role in this regard. Finally, States as catalysts of a participatory and meaningful impact assessment must meet their responsibilities before human rights law.

This means that state bodies must also conduct their analysis of crucial aspects of the system in collaboration with independent data scientists and civil society experts. For that, they should be able to have access to the system’s source code and executables, anonymized training datasets, and testing materials, including anonymized testing datasets. This would allow analysis of variables and proxies upon which they rely, and analysis to identify and measure any statistical biases (including omitted variable biases).38 Such analysis must consider not only the algorithmic model's operation (“why the model made the decision it did”) but also the design process (“why the model was designed that way”). The design process entails choices and trade-offs that are in effect policy decisions that will impact the system’s outcomes.39 The best way to address this analysis is through the system’s audit, which should include collaboration with civil society experts in AI, the intersection of technology and human rights, and the field in which the state body aims to implement the system (e.g., health, social security, public security). The outcomes of this analysis should be public and integrate the broader discussion as to whether or not to adopt the AI/ADM system. It is particularly important to highlight what the system is optimized for and how it calibrates fairness concerns.
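To give a sense of what measuring statistical disparities on an anonymized testing dataset can look like, the sketch below compares two common indicators across groups: the share of people the system flags (selection rate) and the share of people wrongly flagged (false positive rate). The record layout, function names, and sample data are assumptions made for illustration. These two indicators are only a starting point; they do not capture omitted variable biases or the design choices discussed above, and which metrics matter is itself a question for the participatory assessment.

```python
from collections import defaultdict


def rates_by_group(records):
    """Compute per-group selection rate and false positive rate.

    records: iterable of (group, predicted, actual), where `predicted` is the
    system's output and `actual` the verified outcome, both booleans.
    """
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "negatives": 0, "false_pos": 0})
    for group, predicted, actual in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(predicted)
        if not actual:
            c["negatives"] += 1
            c["false_pos"] += int(predicted)
    return {
        group: {
            "selection_rate": c["flagged"] / c["n"],
            # Undefined when the group has no verified negatives.
            "false_positive_rate": (
                c["false_pos"] / c["negatives"] if c["negatives"] else None
            ),
        }
        for group, c in counts.items()
    }


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups (None if undefined)."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


# Made-up test records: (group, flagged by the system, verified outcome).
sample = [
    ("A", True, True), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", False, False),
]
rates = rates_by_group(sample)
print(rates)
print(max_gap(rates, "selection_rate"), max_gap(rates, "false_positive_rate"))
```

In this made-up sample, group B is flagged more often and wrongly flagged more often than group A, which is the kind of gap an audit would need to surface, explain, and make public.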

Beyond the assessment of the algorithmic model and its design process, it is essential to consider how it interacts (or would interact) with its real context of application.

Can the use of this system reliably address the problem in the real world?

Picking up on the first question of this list, it is important to analyze whether the AI/ADM system is fit for purpose regarding the specific social context of its purported implementation. As mentioned above, the analysis should look at whether the envisioned technology or specific system has shown beneficial results in other implementations and how the social context of previous experiences resembles or differs from the reality where it would be implemented. This analysis should coordinate with the audit referred to in the previous question and integrate a broader assessment of the dynamics, disparities, and gaps in place, in addition to the potential impacts of introducing a certain AI/ADM technology with the intended purposes in this social context.

Properly conducting this analysis involves at least two components:

First, to identify which groups are most affected, how, and which of them require special attention, analyzing both related potential impacts and necessary measures to prevent human rights violations in case the AI/ADM system is adopted. Recalling the three-step guide to policy design (see Section 4.3), such analysis includes assessing the differential impact that using this system has or might have for groups in situations of historical discrimination and the actual benefits it may bring for reducing the inequality divide impacting them. It also entails meaningfully consulting the broader community and affected groups, including the views and concerns of groups that have historically been discriminated against.

As we highlighted in the implications of Section 4.3, States must refrain from adopting AI/ADM-based decision-making in contexts where it would be incompatible with human rights, such as state practices that replicate systemic discrimination and/or entail racial profiling. In this sense, States must refrain from implementing AI/ADM technologies that have a disproportionate impact on vulnerable populations and/or inherently reproduce discriminatory views or practices reflected in biased datasets used to train the AI model or feed the system’s operation (see Section 4.4). State use of facial recognition and predictive policing technologies raises exactly these problems and should be rejected.

The second, and related, component is to examine how humans will interact with the algorithmic system and use its outcomes. For that, it is crucial to identify and assess whether there are efficient ways to address the human (and institutional) biases at play. Some of them reflect social problems and institutional discrimination that are entrenched and that human-machine interaction in this context would reproduce with an additional layer of complexity and opacity. When that is the case, as emphasized in the previous paragraph, moving forward is an impermissible risk to human rights. Other biases are virtually inherent to this interaction and must be properly addressed. The so-called “automation bias” deserves special attention. It refers to the human tendency to view machines as objective and inherently trustworthy. It is important to consider how this tendency could play out in the specific context of application, its potential impacts, whether mitigation measures would be efficient, and how to best ensure that human oversight and review of the systems' outcomes are accountable too.

Institutions in charge of the HRIA must properly document each one of these stages, which will be essential for addressing the next and last question.

Is this use adequate, necessary, and proportionate?

If the AI/ADM system is not fit for purpose and its use cannot reliably address the problem in the real world, considering the social context of implementation, then adopting it is not an adequate measure. Moving forward will be incompatible with human rights law and, therefore, the State must look for alternatives other than AI/automated decision-making to address the problem and achieve the intended legitimate goal.

If it genuinely passes the suitability threshold, then all the elements analyzed within the HRIA will serve as an essential basis to ponder benefits, drawbacks, and perils of adopting the AI/ADM system based on the necessary and proportionate test detailed earlier in this section. Adopting the system must be the least harmful measure to achieve the legitimate aim, which involves properly addressing any perils identified with solid mitigation measures and safeguards. Fulfilling proportionality standards also demands consistent compliance with cross-cutting principles and the assurance that the State will definitively implement the system only after adequate testing and any required calibrations to make sure it meets design standards aligned to human rights obligations. We explain more about these requirements below.

Cross-cutting principles

From the initial assessment (the first HRIA) to the monitoring and evaluation stage, there are cross-cutting principles that must guide State action throughout the operational framework. Each of these principles relates to rights that we detailed in this report and embodies obligations and guarantees that state institutions must observe. These cross-cutting principles are:

- Social Participation. It requires giving concrete and practical meaning to the principle that people and social groups are rights holders and have the right to participate through processes and mechanisms that enable meaningful societal influence and feedback, with attention to different backgrounds, expertise, and the need to involve affected groups and groups in situations of historical discrimination (see Sections 3.1 and 4.1; also box “Meaningful Social Participation” in this section).

- Access to Information. It demands relying on the duties unfolding from the right to information to build in transparency and accountability across the framework’s flow. This requires a committed approach of States to effectively produce information and promote active transparency, while applying restrictions in a strict manner, i.e., only within the limits and for the period that they are actually necessary and proportionate (see Sections 3.3 and 4.2). This approach must translate into routines, structures, and resources geared to consolidate transparency practices and accountability mechanisms in how institutions assess and implement AI/ADM systems as part of their rights-related decision-making. Meeting all the other principles depends on this one being properly fulfilled (see also Section 5.3).

- Equality and Non-Discrimination. They entail giving priority protection to groups in situations of historical discrimination, adopting a gender and diversity perspective in the framework’s application. This means having a broader view, going beyond people and groups seen as “normal” or “standard” to duly consider and protect diverse bodies and identities. Doing so requires careful attention to the model's inner workings, its metrics, design process, the datasets used, the model’s interaction with human agents that operate and oversee its functioning, and to how this combination integrates and affects the social context in which the system is or will be implemented (see Section 4.3). The latter requires a deep understanding of this social context in order not to reproduce inequalities, neglect gaps, deepen exclusion, and drive injustice. For that, meaningful participation of those affected and those who understand the realities involved, especially from historically discriminated against groups, is imperative across the framework’s stages. Indicators must be designed to enable monitoring and evaluation of the impacts of the system's adoption in specific affected groups with attention to those most vulnerable or marginalized (see Sections 3.3 and 4.2). Human oversight and review of algorithmic decision-making, when properly and transparently ensured, are also critical for safeguarding this principle.

- Privacy and Data Protection. It demands providing people with robust protection, information, and powers as to how their data is processed throughout the framework’s flow and the system’s implementation. The need to safeguard dignity, private life, people’s autonomy and self-determination, including informational self-determination, permeates State use of AI/ADM systems for rights-based determinations (see Section 4.5) and so must permeate the application of this framework. These rights and guarantees are enablers of a person’s ability to freely develop their personality and life plans. Data processing must be secure, legitimate and lawful, limited to specific explicit purposes, and necessary and proportionate for fulfilling these purposes. Data subjects have a set of associated rights (e.g. access, rectification, opposition, etc.) that emphasize that people cannot be instrumentalized through the processing of their data. People have the right to understand how their data is processed to shape state bodies’ perceptions and conclusions about who they are. This postulate reinforces the need for meaningful social participation across the operational framework and for solid due process guarantees within each decision-making procedure.

- Due Process/Access to Justice. It requires that preventing arbitrary decision-making be the mainstay of State action at all stages of this framework. This means working as a warning sign to flag when efforts to integrate AI/ADM systems into rights-related decision-making are impermissible and must stop. This is the case, for example, when decisions must mainly rely on legal and human reasoning and prudence, or when repeating patterns in the available data actually perpetuates injustice. Preventing arbitrary decision-making as a cornerstone also means establishing and observing the preconditions this entails in each context. Generally, for State rights-related decision-making, this entails making justified determinations that people can understand and challenge (see Sections 4.4 and 5.3) through a meaningful, accessible, and expeditious review. Proper human oversight and review are also part of that list.

Cross-cutting principles correspond to a baseline apparatus that States must have in place when assessing and adopting AI/ADM systems for rights-based determinations. In a nutshell, meaningful social participation demands dedicated state officials, budget, processes, and planning so it’s not a mere box-checking exercise. Access to information requires routines and personnel to produce, organize, and disclose information, both actively and in response to requests, as well as an independent supervisory body with sufficient and effective powers. Equality and non-discrimination relies on the structure needed for meaningful civic participation, particularly for engaging groups that have been historically discriminated against. It also entails mobilizing diverse expert knowledge within and outside state institutions to properly assess the system and the social context of application and address potential issues. It demands ongoing monitoring of the project’s implementation, with competent and accountable human oversight of the tool and diversity and human rights-oriented production and analysis of indicators. Privacy and data protection requires security infrastructure and expertise. It also includes having an independent data protection supervisory body in addition to state departments or officials that can fulfill the role of data protection officers alongside measures and routines to timely satisfy data subjects’ rights. Finally, due process/access to justice demands easily accessible, equitable, and effective judicial and administrative remedies. It also entails proper structures to investigate and punish human rights violations resulting from state use of AI/ADM systems, ensuring reparation and non-repetition.

Having such an adequate apparatus is not secondary. It stems from States’ obligation to prevent human rights violations and their fundamental role as guarantors of human rights. The following stages of the operational framework also reflect this concern.

Design & Testing

The design stage of the operational framework indicates five areas of attention. Some of them take inspiration from the IACHR’s diagram of analysis for public policy with a human rights approach,40 which also happens in the other stages (i.e., implementation and monitoring and evaluation). We elaborate on each of these areas below:

Scope of the system’s use and related protocols (including human-machine interaction). The outcomes of the HRIA conducted in the first stage will inform the definition of the exact scope of use of the AI/ADM tool and how it will integrate the State’s policy or initiative. Necessary and proportionate standards are key in properly tailoring the scope, which includes setting the functions and tasks the system is expected to perform or contribute to within such State’s policy or initiative. Alongside this definition, establishing adequate and thorough protocols of use is vital for the legitimate adoption of the model by state officials and institutions. These protocols must be public as a rule, forming part of the body of norms regulating that State’s policy or initiative. Any impulses or intents to restrict access to information on such procedures must be faced with the prevalence of due process guarantees. As we noted in the implications of Section 4.4, the guarantees of independence and impartiality mean that people, as a general rule, know what to expect from decision-making affecting their rights. Any access limitations that jeopardize due process guarantees cross the safety line against arbitrary decision-making that the due process principle must represent in the context of this report. The protocols must address the governance, security, and operation of both the system and the data involved, as well as how the system’s outcomes integrate the state policy or initiative at issue. A crucial aspect concerns the human-machine interaction within the system’s operation. 
Protocols must be clear about the human oversight approach adopted,41 how it works, what competencies are required, the oversight and review powers ensured, and the control and accountability measures applied to human intervention (or inaction), including how “automation bias” is addressed.42 Finally, the implementation of protocols of use entails properly training any officials and agents that will interact with the tool, which should include basic training both in statistics and on the potential limits and shortcomings of the specific AI/ADM tool they will use.

Measures addressing risks, gaps, and specificities of affected groups. Building on the HRIA process and outcomes, the design stage must carefully and efficiently address risks, gaps, and specificities of affected groups so that potential rights limitations are proportionately balanced with the rights that the State aims to enhance. Both the model and related human-machine dynamics must properly respond to the outcomes of the analysis regarding the differential impact that using this system has or might have for groups historically targeted by discrimination and the actual benefits it may bring for reducing the inequality divide impacting them. Fairness metrics are an essential element of this equation and must reflect these outcomes. It is important that this and other relevant calibrations occur at this stage and throughout the system’s use. If risks, gaps, and specificities of affected groups cannot be sufficiently addressed, then the project cannot move forward.

Embedding transparency and accountability. This area covers a set of issues aimed at embedding transparency and accountability not only in the system’s functioning, but also in the broader configuration of how it integrates the State’s policy or initiative. They all follow from the baseline that using AI/ADM does not displace States’ responsibility and accountability for integrating the system into their activities, particularly in the context of rights-affecting decisions. As a consequence, state institutions should not adopt AI/ADM tools whose outcomes and data lifecycle they are not able to explain and/or justify to the public. This unfolds from the fact that it is up to state authorities, rather than the persons affected, to demonstrate that an AI/ADM-based decision was not discriminatory or otherwise arbitrary. At the algorithm level, explainability approaches are crucial, and it is not appropriate to use technologies that include random or unexplainable rationales for decision-making that impacts human rights, as the State cannot satisfy its obligation to show a person subject to such a decision that it was not arbitrary (see Section 5.3). At the human-machine interaction level, it entails devising proper human oversight and protocols of use and control, and ensuring the conditions for them to work. At the procedural level, due process guarantees must permeate this and the previous levels, so that the implications in Section 4.4 are duly observed. They involve the existence of accessible, meaningful (including human), and expeditious review and complaint mechanisms, which points to an institutional level. Assuring that people can effectively exercise all their data-related powers stemming from informational self-determination (e.g., access, rectification, opposition, etc.) also demands measures at these various levels.
Regarding the institutional level, establishing important measures that arise from the cross-cutting principles requires designing a strategy and ensuring the accompanying structure on at least three fronts: (i) disseminating information regarding the system, how it is used, the budget involved, the results of its implementation as part of the policy or initiative, as well as people’s related rights and how to exercise them, including the existence of review and complaint mechanisms; (ii) producing, collecting, and processing anonymized information on the system’s implementation, while making sure that data resulting from review mechanisms, complaint channels, and lawsuits are properly channeled to those implementing and assessing the AI/ADM-based policy or initiative; (iii) articulating public oversight and meaningful social participation through different mechanisms and approaches (see box “Meaningful Social Participation” in this section). The dissemination of information and indicators regarding the AI/ADM-based policy must by no means serve to expose affected people and reproduce stigmatization.

Legal and institutional framework. The normative basis and the institutional scheme underpinning the implementation of the AI/ADM system as part of a State’s policy or initiative must be clear. The identification and coordination of both start in the previous stage of the operational framework as the state body or bodies in charge must spearhead the HRIA process, involving all other relevant state and non-state institutions. Compliance with the legality principle is part of this assessment, which relates to the normative basis. Yet, at the design stage, the HRIA outcomes must serve to fine-tune the institutional and regulatory framework involved. Among other things, this process entails coordinating the adequate institutional structure to implement, oversee, and evaluate the policy or initiative, defining clear roles and responsibilities among institutions involved; setting strategies for streamlining relevant fixes or changes; and allocating and planning the budget required to properly implement the AI/ADM-based policy or initiative (which goes beyond the procurement or development of the tool itself to encompass the processes, personnel, and structures needed with attention to cross-cutting principles). Diversity, multidisciplinarity, and proper expertise of the people directly involved with the implementation are all key for ensuring it runs adequately. So too is devising the project’s governance to enable public oversight and meaningful social participation.

Monitoring and evaluation (M&E) processes and indicators. In close relation with embedding transparency and accountability, as well as ensuring the proper institutional framework, it is essential to design how the AI/ADM-based policy or initiative will be monitored and evaluated. This includes defining the relevant indicators and how the institutions in charge will collect, organize, and make them publicly available. M&E processes must disaggregate indicators by gender, ethnicity, and other relevant elements of diversity, such as socioeconomic status, age, disability, etc. They must also include specific human rights indicators. The design of M&E processes must articulate the necessary routines, metrics, and channels so that all elements included in the M&E stage of this operational framework can be properly assessed.
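
To make the idea of disaggregated indicators more concrete, the following sketch computes a hypothetical indicator (the share of denied applications) for each combination of gender and ethnicity. It is an illustration only: the field names, the sample records, and the `MIN_CELL_SIZE` threshold are assumptions introduced here. Suppressing cells with very few people is one common way to reduce the risk that published indicators expose affected individuals; the appropriate threshold and technique would need to be defined with data protection authorities.

```python
from collections import Counter

MIN_CELL_SIZE = 5  # assumed threshold; cells smaller than this are not published


def disaggregate(records, dimensions, flag_key, min_cell=MIN_CELL_SIZE):
    """Return the share of flagged records per combination of dimensions.

    Cells with fewer than `min_cell` records map to None (suppressed).
    """
    totals = Counter()
    flagged = Counter()
    for row in records:
        cell = tuple(row[d] for d in dimensions)
        totals[cell] += 1
        if row[flag_key]:
            flagged[cell] += 1
    report = {}
    for cell, count in totals.items():
        report[cell] = None if count < min_cell else flagged[cell] / count
    return report


# Hypothetical anonymized records (illustrative only).
records = (
    [{"gender": "woman", "ethnicity": "indigenous", "denied": True}] * 3
    + [{"gender": "woman", "ethnicity": "indigenous", "denied": False}] * 3
    + [{"gender": "man", "ethnicity": "indigenous", "denied": True}] * 2
)

report = disaggregate(records, ("gender", "ethnicity"), "denied")
for cell, share in report.items():
    print(cell, "suppressed" if share is None else f"{share:.0%}")
```

Disaggregating by combinations of attributes, rather than one attribute at a time, helps surface intersectional impacts, at the cost of producing smaller cells that are more likely to require suppression.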

Having covered these five areas, the AI/ADM-based policy or initiative should be rolled out in a small pilot program to test whether everything is working as planned and whether the use of the AI/ADM system is an adequate and proportionate means to achieve the legitimate and stated goal before being implemented at scale. This allows for comparison with a baseline control setting that should be made public to allow expert organizations and affected groups to comment on its efficacy and compliance with human rights standards.

Implementation & Operation

Having successfully completed the design stage, the state institution(s) in charge of the project can then move to its full implementation and operation. At this stage, it is critical that all areas work properly by following specifications, planning, and processes designed. Here again we outline five areas of attention:

Reliability, Accessibility, Contestability, Adaptability. It is important to ensure that the AI/ADM-based policy or initiative is reliable, accessible, contestable, and adaptable. Reliability comprises the elements we discussed above when scoping the issue, particularly when addressing the questions on whether the technology or system was reliable and whether its use could reliably address the problem in the real world. Briefly, it means performing well in the given task and having robust security. Assessing performance requires looking at the model itself, the interaction between the tool and human agents involved, as well as how this system integrates with and impacts its social context of application. Reliability is also connected to the overall quality of the AI/ADM-based policy or initiative in terms of fulfilling the legitimate goal and enhancing rights. Accessibility means that the policy or initiative is not exclusionary, especially that its AI/ADM component does not create logistical or technical barriers. There should be no obstacle of this kind for accessing the benefits of the policy or the guarantees applied to the system’s use, such as data subjects’ rights and review and complaint mechanisms. Contestability requires easy and equitable access to administrative and judicial remedies that are effective and expeditious. This includes a meaningful review mechanism still at the administrative level which ensures proper and accountable human analysis. Contestability also entails that people know they are subject to a decision-making procedure and can understand the underlying logic of such a decision. Finally, it means that systems should be auditable by independent experts on behalf of persons and communities affected.
Adaptability demands continuous analysis and monitoring of the system’s implementation, collecting properly anonymized data on its operation and outcomes, comparing results for progress toward the stated goal, and checking whether associated processes, structures, and guarantees are working as planned. All that must be documented and lead to the necessary adjustments.

Routines and apparatus to fulfill cross-cutting principles. Connected to adaptability, this area of attention recalls that the adequate implementation of the AI/ADM-based policy or initiative requires state institutions’ routines and apparatus capable of responding to the demands unfolding from the cross-cutting principles (see “Cross-cutting principles” above, in this section). The design stage aims at addressing gaps and structuring tasks across its five areas of attention outlined above (see “Design,” in this section). If the implementation of the AI/ADM-based policy or initiative moves forward, the proper routines and apparatus must be in place to prevent human rights violations and to properly comply with human rights standards.

Proper expertise and allocation of resources. It goes hand in hand with having the appropriate routines and apparatus to meet the cross-cutting principles and comply with human rights. Doing so demands that institutions and people involved have the required expertise to fulfill their role, from the human agents directly interacting with the tool to an oversight institution and its officials; from the personnel producing and organizing related indicators about the system’s use to those assessing complaints and collecting feedback from the affected community, just to give some examples. Mobilizing appropriate expertise should also count on independent experts from academia and civil society, having a diverse and multidisciplinary approach, through a collaboration that does not replace States’ responsibilities in this context. This means that States must allocate sufficient and maintainable resources—human and financial—to ensure that proper routines, apparatus, and expertise can put into action the HRIA-based design devised in the previous stage (see “Design,” also in this section).

Institutional cooperation, coordination, and oversight. Related to the previous areas, it highlights the importance of institutional cooperation and coordination considering the roles and responsibilities consolidated in the design stage (see “Legal and institutional framework” above). It is relevant to leverage the combined expertise of government entities to encompass data protection authorities and, as appropriate, bodies related to science and technology, education, health, justice, etc. Clear information sharing on known flaws and issues must feed such cooperation and coordination mechanisms. Moreover, a crucial piece of this institutional framework is ensuring proper independent oversight. Different arrangements are possible—it can be the data protection authority, or another authority that centralizes the oversight of government use of AI/ADM systems, or institutions in charge of this role can vary depending on the system’s context of use and field of application. In any case, it should be independent from those responsible for the system’s implementation and count on the necessary powers, expertise, and budget to fulfill its tasks. Institutional oversight can also benefit from a broader oversight ecosystem formed by public ombudsman entities (like Defensorías del Pueblo), public defenders’ offices, among others that may exist in each domestic context.

Public oversight. Institutional oversight must feed broader public oversight and vice-versa. First, it is important that institutions responsible for monitoring the system’s implementation have effective participation channels and mechanisms in place. Through them, oversight institutions can consult and receive feedback from affected people and communities as well as establish dynamics of collaboration with academia and civil society. Both the institutions leading implementation and those in charge of oversight must take steps to ensure that processes and mechanisms designed to disseminate information about the AI/ADM-based policy are working and properly reach the broader public as well as those directly affected (see Section 5.3 and “Embedding transparency and accountability” in this section). They must also make sure that review mechanisms and feedback processes are effective and are duly considered in the system’s implementation, for instance, to signal necessary fixes. Affected communities, civil society organizations, and the press, among others, all have a role to play building on existing information, processes, and mechanisms to monitor the implementation of the policy or initiative and push the institutions in charge for human-rights compliant outcomes. This includes engaging in the continuous evaluation of the system’s use by feeding institutions’ ongoing monitoring and taking part in periodic human rights impact assessments.

Meaningful Social Participation

Meaningful civic participation throughout the decision-making process as to developing, purchasing, implementing, and evaluating AI/ADM-based public policies or initiatives is an essential part of ensuring equality and non-discrimination, due process, self-determination, social protection, and, ultimately, the foundations of a democratic State. It must go hand in hand with compliance with human rights law and standards, which participatory mechanisms must enhance, and not compromise.

Participatory mechanisms can take many complementary forms, and must include historically discriminated against groups and affected people and communities. This operational framework seeks to articulate some mechanisms and structures. They involve meaningful and consistent engagement with affected communities; audits of the system in collaboration with independent data scientists and civil society experts, not only in technology-related fields, but also in human rights, differential impact suffered by vulnerable groups, and other specific areas concerned (e.g. health, child protection, criminal justice, etc.); broader consultation processes; feedback and complaint mechanisms; civic participation within oversight institutions; and coordination with ombudsman bodies or similar entities that advocate for the public43 as moving pieces of a continuous human rights-based evaluation of AI/ADM-supported state initiatives. Yet, there is certainly room for improvement in innovative and effective ways for participation. State actors, civil society, and academia should draw on shared knowledge about participatory methods to devise and set out more robust public engagement and oversight in this context.44

The Open Government Partnership provides some important standards to consider for accomplishing this task, such as establishing a permanent space for dialogue and collaboration; providing open, accessible, and timely information about related activities; and fostering inclusive and informed opportunities for co-creation. They rely on guiding principles that add greater substance to government commitments on transparency, inclusive participation, and accountability.45

One relevant aspect we underlined in Section 3.1 is that States must clearly specify how contributions coming from consultation and participation mechanisms inform the design, implementation, and evaluation of their use of AI/ADM systems. We also emphasized that Inter-American participatory mechanisms within the context of indigenous and Afro-descendant communities bring valuable models and lessons for meaningful participation, especially those related to prior, free, and informed consultation of affected communities. Some of them are:

- Consultation is not a single act, but a process of dialogue where clear, accessible, and complete information is provided with sufficient time to allow proper engagement;46

- Consultation in good faith requires the absence of any type of coercion and must go beyond merely pro forma procedures;47

- Failure to pay due regard to the consultation's results is contrary to the principle of good faith;48

- Decisions resulting from the consultation process are subject to higher administrative and judicial authorities, through adequate and effective procedures, to evaluate their validity, pertinence, and the balance between rights and interests at stake.49

In this sense, we should recall the formulation of the UN Committee on Economic, Social and Cultural Rights on the right of every person to take part in scientific progress and in decisions concerning its direction (see Section 5.3). State use of algorithmic systems for rights-affecting determinations should not disregard these guarantees.

Monitoring & Evaluation (M&E)

Ongoing monitoring of the AI/ADM-based policy or initiative, including the system's functioning, must take place alongside implementation and operation. It puts into action the processes and indicators devised and coordinated in the design stage (see “Monitoring & evaluation processes and indicators”), which should include periodic audits and human rights impact assessments (HRIAs). As in the scoping stage, recurring HRIAs should incorporate the outcomes of thorough audits to articulate a broader and participatory analysis grounded in human rights standards. Accordingly, there is a set of questions that the first HRIA after the system’s implementation, as well as subsequent ones, should address in analyzing whether the state institution should continue using the AI/ADM system and, if so, how. The questions below are not exhaustive, but all are relevant for this analysis.

- Are rights enhanced and the legitimate goal being satisfied?

- Is the use of the system helping to bridge the inequality divides identified?

- Are rights limitations necessary and proportionate?

- Are any potential differential impacts adequately addressed?

- Is the system performing well and in an accountable manner?

- Is human oversight adequately fulfilling its role?

- Is data processing legitimate, proportionate, and secure? Is the system protected against vulnerabilities?

- Are cross-cutting principles properly fulfilled?

- Are review and complaint mechanisms meaningful, accessible, and effective? Are they reliably feeding M&E processes and adjustments in the implementation?

- Do M&E indicators reliably capture the social context of application and affected communities? Are they properly disaggregated considering historically discriminated and vulnerable groups, especially those that require special attention in the context of the policy or initiative?

- Is information about the AI/ADM-based policy or initiative, including the algorithmic system, duly provided to affected people and the public? (see Section 5.3)

- Are the means to exercise data subjects’ rights ensured in an easy, timely, and complete manner?

- Is the state apparatus sufficiently equipped and coordinated to carry out this AI/ADM-based policy or initiative in compliance with human rights?

- Are routines, processes, and institutional structures enabling public oversight and meaningful social participation, including from historically discriminated against groups?

- Are States’ prevention and remediation duties adequately addressed?

Responding to these questions entails consistent information-sharing about the system’s performance, flaws, and issues, how it is integrated into the policy or initiative, and the results of the policy or initiative so far. It also involves the committed and competent work of oversight institutions. The assessment must rely on meaningful feedback from affected people through polls, consultations, or other appropriate instruments, with attention to historically discriminated against groups. Anonymized data from complaint mechanisms and from administrative and judicial challenges are also a vital input for the HRIA process. Finally, institutions leading the assessment must provide substantial means for the participation of communities, experts, academia, and civil society organizations.
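As an illustration of the question on disaggregated indicators above, the following is a minimal sketch, in Python, of how an M&E team might break an outcome indicator down by group. Everything in it is an assumption made for illustration: the field names `group` and `benefit_denied`, the sample records, and the helper functions are hypothetical and do not come from any specific system or from the standards discussed in this report. A gap between groups is only a signal for closer, participatory examination, not a finding in itself.

```python
from collections import defaultdict


def disaggregate_rates(records, group_key, outcome_key):
    """Return {group: (count, rate)} for a true/false outcome.

    `records` is a list of dicts holding anonymized decision data.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            positives[group] += 1
    return {g: (totals[g], positives[g] / totals[g]) for g in totals}


def largest_gap(rates):
    """Difference between the highest and lowest group rates."""
    values = [rate for _count, rate in rates.values()]
    return max(values) - min(values)


# Hypothetical, anonymized sample (illustrative only).
records = [
    {"group": "A", "benefit_denied": True},
    {"group": "A", "benefit_denied": False},
    {"group": "A", "benefit_denied": False},
    {"group": "A", "benefit_denied": False},
    {"group": "B", "benefit_denied": True},
    {"group": "B", "benefit_denied": True},
    {"group": "B", "benefit_denied": False},
    {"group": "B", "benefit_denied": False},
]

rates = disaggregate_rates(records, "group", "benefit_denied")
gap = largest_gap(rates)
for group, (count, rate) in sorted(rates.items()):
    print(f"group={group} n={count} denial_rate={rate:.2f}")
print(f"largest gap between groups: {gap:.2f}")
```

Reporting the count next to each rate matters because small groups can produce unstable rates; in practice, which groups to disaggregate by and how to interpret differences should be defined with affected communities and oversight institutions.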

To conclude the analysis, and based on the questions above, the M&E processes and the HRIA should finally assess:

Should the State keep using an AI/ADM system at all, and this one in particular? If so, what should continue, what should change, and why?

The implications developed throughout this report (see "standard's implications") provide specific guidance that States must consider when conducting the HRIA and other M&E processes. It is crucial that the institutions in charge make clear how they examined the relevant issues, including how they analyzed and incorporated inputs coming from feedback and participation mechanisms. Diversity, multidisciplinarity, and proper expertise among the people responsible are all key to enabling meaningful M&E processes and the HRIA. The assessment must be properly documented, and state institutions must disseminate information on the outcomes of the evaluation, including by making the HRIA report publicly available.

Notes

-

IACHR, Public Policy with a Human Rights Approach, para. 44. ↩

-

IACHR, Public Policy with a Human Rights Approach, para. 56 (emphasis added). ↩

-

See an overview at <https://www.law.cornell.edu/wex/trade_secret>. ↩

-

See Case of Palamara-Iribarne v. Chile, Merits, Reparations and Costs, Judgment of November 22, 2005. ↩

-

“Art. 21. Right to Property. 1. Everyone has the right to the use and enjoyment of his property. The law may subordinate such use and enjoyment to the interest of society. 2. No one shall be deprived of his property except upon payment of just compensation, for reasons of public utility or social interest, and in the cases and according to the forms established by law. 3. Usury and any other form of exploitation of man by man shall be prohibited by law.” ↩

-

“Art. 14. Right to the benefits of culture. 1. The States Parties to this Protocol recognize the right of everyone: [...] (c) to benefit from the protection of moral and material interests deriving from any scientific, literary or artistic production of which he is the author.” Art. XIII of the American Declaration of the Rights and Duties of Man: “Every person has the right [...] to the protection of his moral and material interests as regards his inventions or any literary, scientific or artistic works of which he is the author.” ↩

-

Case of Palamara-Iribarne v. Chile. ↩

-

See United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 17 (2005): The Right of Everyone to Benefit from the Protection of the Moral and Material Interests Resulting from any Scientific, Literary or Artistic Production of which he or she is the Author (Article 15, Paragraph 1 (c), of the Covenant), E/C.12/GC/17, January 12, 2006. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 17 (2005), para. 4. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 17 (2005), para. 7. ↩

-

“Thus, within the broad concept of ‘assets’ whose use and enjoyment are protected by the Convention are also the works resulting from the intellectual creation of a person, who, as the author of such works, acquires thereupon the property rights related to the use and enjoyment thereof.” Case of Palamara-Iribarne v. Chile, para. 102. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 17 (2005), para. 35 (emphasis added). ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 25 (2020) on Science and Economic, Social and Cultural Rights (Article 15 (1) (b), (2), (3) and (4) of the International Covenant on Economic, Social and Cultural Rights), E/C.12/GC/25, April 30, 2020, para. 9. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 25 (2020), para. 10. The right of everyone to take part in cultural life is established in Art. 15(1)(a) of the ICESCR and in Art. 14(1)(a) of the Protocol of San Salvador. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 25 (2020), para. 10. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 25 (2020), para. 10. ↩

-

United Nations, Committee on Economic, Social and Cultural Rights, General Comment No. 25 (2020), para. 54. ↩

-

This understanding corroborates what we point out in Section 4.2. ↩

-

The Chilean Repositorio Algoritmos Públicos, linked to Gob_Lab at the Adolfo Ibañez University, available at <https://algoritmospublicos.cl/repositorio>. The UK Tracking Automated Government (TAG) Register, developed by the Public Law Project, available at <https://trackautomatedgovernment.shinyapps.io/register/>. The Canadian TAG register, held by the Starling Centre, available at <https://tagcanada.shinyapps.io/register/>. The City of Amsterdam Algorithm Register, available at <https://algoritmeregister.amsterdam.nl/en/ai-register/>. The U.S. AI Use Case Inventory, available at <https://www.dhs.gov/data/AI_inventory>. ↩

-

With regard to information on privacy and data processing, we call specific attention to the following implications in Sections 4.2 and 4.5, respectively: “When it comes to AI/ADM systems deployed for surveillance purposes, including in the context of national security, people should be informed, at a minimum, about the legal framework regulating these practices; the bodies authorized to use such systems; oversight institutions; procedures for authorizing the system's use, selecting targets, processing data, and establishing the duration of surveillance; protocols for sharing, storing, and destroying intercepted material; and general statistics regarding these activities.” And “[f]ree and informed consent require providing data subjects with sufficient information about the details of the data to be collected, the manner of its collection, the purposes for which it will be used and the possibility, if any, of its disclosure; the individual should also express their willingness in such a way that there is no doubt about their intention. In short, the data subject should have the ability to exercise a real choice and there should be no risk of deception, intimidation, coercion or significant negative consequences to the individual from refusal to consent.” ↩

-

Inspired by Julia Stoyanovich’s point that “algorithmic transparency requires data transparency.” The author adds that data transparency is not synonymous with making all data public, noting that it is also important to release “data selection, collection and pre-processing methodologies; data provenance and quality information; known sources of bias; privacy-preserving statistical summaries of the data.” Stoyanovich, J. (n.d.). Interpretability, DS-GA 3001.009: Responsible Data Science, pp. 21-22. ↩

-

Hamon, R., Junklewitz, H., & Sanchez, I. (2020). Robustness and Explainability of Artificial Intelligence: From Technical to Policy Solutions. Publications Office of the European Union, p. 2. ↩

-

The description of these three levels is mostly taken from Hamon, R., Junklewitz, H., & Sanchez, I. (2020). ↩

-

For a template to document the training dataset, see Gebru, T., Morgenstern, J., Vecchione, B., Vaughan, J. W., Wallach, H., Daumé III, H., & Crawford, K. (2021). Datasheets for Datasets. arXiv. In turn, a template focused on the model can be found in Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., Spitzer, E., Raji, I. D., & Gebru, T. (2019). Model Cards for Model Reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Association for Computing Machinery (ACM). This template inspired the development of a Transparency Sheet (Ficha de transparencia) for government algorithmic systems by the Chilean Adolfo Ibañez University’s Gob_Lab. The template of the transparency sheet is available at <https://herramienta-transparencia-goblab-uai.streamlit.app/>. ↩

-

Foryciarz, A., Leufer, D., & Szymielewicz, K. (2020). Black-Boxed Politics: Opacity is a Choice in AI Systems. Towards Data Science. Human decisions that shape an AI system include: setting the main objective; eliciting values and preferences; choosing the most important outcome; selecting a dataset; choosing a prediction method [a model]; testing and calibrating the system; and updating the model. ↩

-

Selbst and Barocas pose and develop these questions in Selbst, A., & Barocas, S. (2018). The Intuitive Appeal of Explainable Machines. Fordham Law Review, v. 87. ↩

-

Selbst, A., & Barocas, S. (2018), p. 1129. ↩

-

UK Information Commissioner’s Office (ICO) & The Alan Turing Institute. (October 17, 2022). Explaining decisions made with AI, p. 23. The document articulates different focuses for explanations regarding AI systems based on a set of important elements organized in the following categories: rationale, responsible actors, data processing, fairness, safety and performance, and impact mitigation and monitoring. We should also note that “process-” and “outcome-based” explanations relate to the two interpretability approaches that the Robustness and Explainability of Artificial Intelligence report briefly points out and explains as a “global interpretability setup” and “providing an explanation for a single prediction made by the system.” Hamon, R., Junklewitz, H., & Sanchez, I. (2020), pp. 12-13. ↩

-

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1, 206-215. See also Hamon, R., Junklewitz, H., & Sanchez, I. (2020), p. 13 (Section 3.2.4 Interpretable models vs. post-hoc interpretability). ↩

-

Hamon, R., Junklewitz, H., & Sanchez, I. (2020), p. 24. ↩

-

See Williams, J., & Gunn, L. (May 7, 2018). Can the available data actually lead to a good outcome? In Math Can’t Solve Everything: Questions We Need To Be Asking Before Deciding an Algorithm is the Answer. Electronic Frontier Foundation. ↩

-

IACHR, Public Policy with a Human Rights Approach. ↩

-

The comments on the questions below take into account other EFF resources related to the topic, particularly Lacambra, S. (2018). Artificial Intelligence and Algorithmic Tools. A Policy Guide for Judges and Judicial Officers. Electronic Frontier Foundation. ↩

-

A proxy is a variable that is not in itself directly relevant, but that serves in place of an unobservable or immeasurable variable. ↩

-

See Selbst, A. D., Boyd, D., Friedler, S. A., Venkatasubramanian, S., & Vertesi, J. (2019). Fairness and Abstraction in Sociotechnical Systems. In Proceedings of the Conference on Fairness, Accountability, and Transparency (FAT* '19). Association for Computing Machinery (ACM), New York, NY, USA, 59-68. https://doi.org/10.1145/3287560.3287598 ↩

-

Hamon, R., Junklewitz, H., & Sanchez, I. (2020), p. 14. ↩

-